This tutorial is viewable at: http://swift-lang.org/tutorials/cray/swift-cray-tutorial.html

Introduction: Why Parallel Scripting?

Swift is a simple scripting language for executing many instances of ordinary application programs on distributed parallel resources. Swift scripts run many copies of ordinary programs concurrently, using statements like this:

foreach protein in proteinList {

runBLAST(protein);

}Swift acts like a structured "shell" language. It runs programs concurrently as soon as their inputs are available, reducing the need for complex parallel programming. Swift expresses your workflow in a portable fashion: The same script runs on multicore computers, clusters, clouds, grids, and supercomputers.

In this tutorial, you’ll be able to first try a few Swift examples (parts 1-3) on the Cray login host, to get a sense of the language. Then in parts 4-6 you’ll run similar workflows on Cray compute nodes, and see how more complex workflows can be expressed with Swift scripts.

Swift tutorial setup

To install the tutorial scripts on Cray "Raven" XE6-XK7 test system, do:

$ cd $HOME

$ tar xzf /home/users/p01537/swift-cray-tutorial.tgz

$ cd swift-cray-tutorial

$ source setup.sh # You must run this with "source" !Verify your environment

To verify that Swift (and the Java environment it requires) are working, do:

$ java -version # verify that you have Java (ideally Oracle JAVA 1.6 or later)

$ swift -version # verify that you have Swift 0.94.1|

|

If you re-login or open new ssh sessions, you must re-run source setup.sh in each ssh shell/window. |

To check out the tutorial scripts from SVN

If you later want to get the most recent version of this tutorial from the Swift Subversion repository, do:

$ svn co https://svn.ci.uchicago.edu/svn/vdl2/SwiftTutorials/swift-cray-tutorial Cray-SwiftThis will create a directory called "Cray-Swift" which contains all of the files used in this tutorial.

Simple "science applications" for the workflow tutorial

This tutorial is based on two intentionally trivial example programs,

simulation.sh and stats.sh, (implemented as bash shell scripts)

that serve as easy-to-understand proxies for real science

applications. These "programs" behave as follows.

simulate.sh

The simulation.sh script serves as a trivial proxy for any more complex scientific simulation application. It generates and prints a set of one or more random integers in the range [0-2^62) as controlled by its command line arguments, which are:

$ ./app/simulate.sh --help

./app/simulate.sh: usage:

-b|--bias offset bias: add this integer to all results [0]

-B|--biasfile file of integer biases to add to results [none]

-l|--log generate a log in stderr if not null [y]

-n|--nvalues print this many values per simulation [1]

-r|--range range (limit) of generated results [100]

-s|--seed use this integer [0..32767] as a seed [none]

-S|--seedfile use this file (containing integer seeds [0..32767]) one per line [none]

-t|--timesteps number of simulated "timesteps" in seconds (determines runtime) [1]

-x|--scale scale the results by this integer [1]

-h|-?|?|--help print this help

$All of thess arguments are optional, with default values indicated above as [n].

With no arguments, simulate.sh prints 1 number in the range of 1-100. Otherwise it generates n numbers of the form (R*scale)+bias where R is a random integer. By default it logs information about its execution environment to stderr. Here’s some examples of its usage:

$ simulate.sh 2>log

5

$ head -4 log

Called as: /home/wilde/swift/tut/CIC_2013-08-09/app/simulate.sh:

Start time: Thu Aug 22 12:40:24 CDT 2013

Running on node: login01.osgconnect.net

$ simulate.sh -n 4 -r 1000000 2>log

239454

386702

13849

873526

$ simulate.sh -n 3 -r 1000000 -x 100 2>log

6643700

62182300

5230600

$ simulate.sh -n 2 -r 1000 -x 1000 2>log

565000

636000

$ time simulate.sh -n 2 -r 1000 -x 1000 -t 3 2>log

336000

320000

real 0m3.012s

user 0m0.005s

sys 0m0.006sstats.sh

The stats.sh script serves as a trivial model of an "analysis" program. It reads N files each containing M integers and simply prints the\ average of all those numbers to stdout. Similarly to simulate.sh it logs environmental information to the stderr.

$ ls f*

f1 f2 f3 f4

$ cat f*

25

60

40

75

$ stats.sh f* 2>log

50Basic of the Swift language with local execution

A Summary of Swift in a nutshell

-

Swift scripts are text files ending in

.swiftTheswiftcommand runs on any host, and executes these scripts.swiftis a Java application, which you can install almost anywhere. On Linux, just unpack the distributiontarfile and add itsbin/directory to yourPATH. -

Swift scripts run ordinary applications, just like shell scripts do. Swift makes it easy to run these applications on parallel and remote computers (from laptops to supercomputers). If you can

sshto the system, Swift can likely run applications there. -

The details of where to run applications and how to get files back and forth are described in configuration files separate from your program. Swift speaks ssh, PBS, Condor, SLURM, LSF, SGE, Cobalt, and Globus to run applications, and scp, http, ftp, and GridFTP to move data.

-

The Swift language has 5 main data types:

boolean,int,string,float, andfile. Collections of these are dynamic, sparse arrays of arbitrary dimension and structures of scalars and/or arrays defined by thetypedeclaration. -

Swift file variables are "mapped" to external files. Swift sends files to and from remote systems for you automatically.

-

Swift variables are "single assignment": once you set them you can’t change them (in a given block of code). This makes Swift a natural, "parallel data flow" language. This programming model keeps your workflow scripts simple and easy to write and understand.

-

Swift lets you define functions to "wrap" application programs, and to cleanly structure more complex scripts. Swift

appfunctions take files and parameters as inputs and return files as outputs. -

A compact set of built-in functions for string and file manipulation, type conversions, high level IO, etc. is provided. Swift’s equivalent of

printf()istracef(), with limited and slightly different format codes. -

Swift’s

foreach {}statement is the main parallel workhorse of the language, and executes all iterations of the loop concurrently. The actual number of parallel tasks executed is based on available resources and settable "throttles". -

In fact, Swift conceptually executes all the statements, expressions and function calls in your program in parallel, based on data flow. These are similarly throttled based on available resources and settings.

-

Swift also has

ifandswitchstatements for conditional execution. These are seldom needed in simple workflows but they enable very dynamic workflow patterns to be specified.

We’ll see many of these points in action in the examples below. Lets get started!

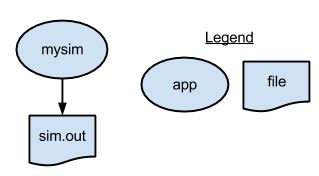

Part 1: Run a single application under Swift

The first swift script, p1.swift, runs simulate.sh to generate a single random number. It writes the number to a file.

type file;

app (file o) simulation ()

{

simulate stdout=@filename(o);

}

file f <"sim.out">;

f = simulation();To run this script, run the following command:

$ cd part01

$ swift p1.swift

Swift 0.94.1 RC2 swift-r6895 cog-r3765

RunID: 20130827-1413-oa6fdib2

Progress: time: Tue, 27 Aug 2013 14:13:33 -0500

Final status: Tue, 27 Aug 2013 14:13:33 -0500 Finished successfully:1

$ cat sim.out

84

$ swift p1.swift

$ cat sim.out

36To cleanup the directory and remove all outputs (including the log files and directories that Swift generates), run the cleanup script which is located in the tutorial PATH:

$ cleanup|

|

You’ll also find two Swift configuration files in each partNN

directory of this tutorial. These specify the environment-specific

details of where to find application programs (file apps) and where

to run them (file sites.xml). These files will be explained in more

detail in parts 4-6, and can be ignored for now. |

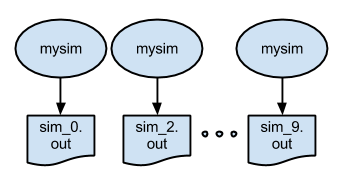

Part 2: Running an ensemble of many apps in parallel with a "foreach" loop

The p2.swift script introduces the foreach parallel iteration

construct to run many concurrent simulations.

type file;

app (file o) simulation ()

{

simulate stdout=@filename(o);

}

foreach i in [0:9] {

file f <single_file_mapper; file=@strcat("output/sim_",i,".out")>;

f = simulation();

}In part 2, we also update the apps file. Instead of using shell script (simulate.sh), we use the equivalent python version (simulate.py). The new apps file now looks like this:

localhost simulate simulate.pySwift does not need to know anything about the language an application is written in. The application can be written in Perl, Python, Java, Fortran, or any other language.

The script also shows an

example of naming the output files of an ensemble run. In this case, the output files will be named

output/sim_N.out.

To run the script and view the output:

$ cd ../part02

$ swift p2.swift

$ ls output

sim_0.out sim_1.out sim_2.out sim_3.out sim_4.out sim_5.out sim_6.out sim_7.out sim_8.out sim_9.out

$ more output/*

::::::::::::::

output/sim_0.out

::::::::::::::

44

::::::::::::::

output/sim_1.out

::::::::::::::

55

...

::::::::::::::

output/sim_9.out

::::::::::::::

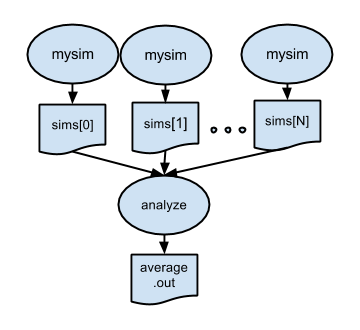

82Part 3: Analyzing results of a parallel ensemble

After all the parallel simulations in an ensemble run have completed,

its typically necessary to gather and analyze their results with some

kind of post-processing analysis program or script. p3.swift

introduces such a postprocessing step. In this case, the files created

by all of the parallel runs of simulation.sh will be averaged by by

the trivial "analysis application" stats.sh:

type file;

app (file o) simulation (int sim_steps, int sim_range, int sim_values)

{

simulate "--timesteps" sim_steps "--range" sim_range "--nvalues" sim_values stdout=@filename(o);

}

app (file o) analyze (file s[])

{

stats @filenames(s) stdout=@filename(o);

}

int nsim = @toInt(@arg("nsim","10"));

int steps = @toInt(@arg("steps","1"));

int range = @toInt(@arg("range","100"));

int values = @toInt(@arg("values","5"));

file sims[];

foreach i in [0:nsim-1] {

file simout <single_file_mapper; file=@strcat("output/sim_",i,".out")>;

simout = simulation(steps,range,values);

sims[i] = simout;

}

file stats<"output/average.out">;

stats = analyze(sims);To run:

$ cd part03

$ swift p3.swiftNote that in p3.swift we expose more of the capabilities of the

simulate.sh application to the simulation() app function:

app (file o) simulation (int sim_steps, int sim_range, int sim_values)

{

simulate "--timesteps" sim_steps "--range" sim_range "--nvalues" sim_values stdout=@filename(o);

}p3.swift also shows how to fetch application-specific values from

the swift command line in a Swift script using @arg() which

accepts a keyword-style argument and its default value:

int nsim = @toInt(@arg("nsim","10"));

int steps = @toInt(@arg("steps","1"));

int range = @toInt(@arg("range","100"));

int values = @toInt(@arg("values","5"));Now we can specify that more runs should be performed and that each should run for more timesteps, and produce more that one value each, within a specified range, using command line arguments placed after the Swift script name in the form -parameterName=value:

$ swift p3.swift -nsim=3 -steps=10 -values=4 -range=1000000

Swift 0.94.1 RC2 swift-r6895 cog-r3765

RunID: 20130827-1439-s3vvo809

Progress: time: Tue, 27 Aug 2013 14:39:42 -0500

Progress: time: Tue, 27 Aug 2013 14:39:53 -0500 Active:2 Stage out:1

Final status: Tue, 27 Aug 2013 14:39:53 -0500 Finished successfully:4

$ ls output/

average.out sim_0.out sim_1.out sim_2.out

$ more output/*

::::::::::::::

output/average.out

::::::::::::::

651368

::::::::::::::

output/sim_0.out

::::::::::::::

735700

886206

997391

982970

::::::::::::::

output/sim_1.out

::::::::::::::

260071

264195

869198

933537

::::::::::::::

output/sim_2.out

::::::::::::::

201806

213540

527576

944233Now try running (-nsim=) 100 simulations of (-steps=) 1 second each:

$ swift p3.swift -nsim=100 -steps=1

Swift 0.94.1 RC2 swift-r6895 cog-r3765

RunID: 20130827-1444-rq809ts6

Progress: time: Tue, 27 Aug 2013 14:44:55 -0500

Progress: time: Tue, 27 Aug 2013 14:44:56 -0500 Selecting site:79 Active:20 Stage out:1

Progress: time: Tue, 27 Aug 2013 14:44:58 -0500 Selecting site:58 Active:20 Stage out:1 Finished successfully:21

Progress: time: Tue, 27 Aug 2013 14:44:59 -0500 Selecting site:37 Active:20 Stage out:1 Finished successfully:42

Progress: time: Tue, 27 Aug 2013 14:45:00 -0500 Selecting site:16 Active:20 Stage out:1 Finished successfully:63

Progress: time: Tue, 27 Aug 2013 14:45:02 -0500 Active:15 Stage out:1 Finished successfully:84

Progress: time: Tue, 27 Aug 2013 14:45:03 -0500 Finished successfully:101

Final status: Tue, 27 Aug 2013 14:45:03 -0500 Finished successfully:101We can see from Swift’s "progress" status that the tutorial’s default

sites.xml parameters for local execution allow Swift to run up to 20

application invocations concurrently on the login node. We’ll look at

this in more detail in the next sections where we execute applications

on the site’s compute nodes.

Running applications on Cray compute nodes with Swift

Part 4: Running a parallel ensemble on Cray compute nodes

p4.swift will run our mock "simulation"

applications on Cray compute nodes. The script is similar to as

p3.swift, but specifies that each simulation app invocation should

additionally return the log file which the application writes to

stderr.

Now when you run swift p4.swift you’ll see that two types output

files will placed in the output/ directory: sim_N.out and

sim_N.log. The log files provide data on the runtime environment of

each app invocation. For example:

$ cat output/sim_0.log

Called as: /home/users/p01532/swift-cray-tutorial/app/simulate.sh: --timesteps 1 --range 100 --nvalues 5

Start time: Tue Aug 27 12:17:43 CDT 2013

Running on node: nid00018

Running as user: uid=61532(p01532) gid=61532 groups=61532

Simulation parameters:

bias=0

biasfile=none

initseed=none

log=yes

paramfile=none

range=100

scale=1

seedfile=none

timesteps=1

output width=8

Environment:

ALPS_APP_DEPTH=32

ASSEMBLER_X86_64=/opt/cray/cce/8.2.0.173/cray-binutils/x86_64-unknown-linux-gnu/bin/as

ASYNCPE_DIR=/opt/cray/xt-asyncpe/5.23.02

ASYNCPE_VERSION=5.23.02

...To tell Swift to run the apps on compute nodes, we specify in the

apps file that the apps should be executed on the raven site

(instead of the localhost site). We can specify the location of

each app in the third field of the apps file, with either an

absolute pathname or the name of an executable to be located in

PATH). Here we use the latter form:

$ cat apps

raven simulate simulate.sh

raven stats stats.shYou can experiment, for example, with an alternate version of stats.sh by specfying that app’s location explicitly:

$ cat apps

raven simulate simulate.sh

raven stats /home/users/p01532/bin/my-alt-stats.shWe can see that when we run many apps requesting a larger set of nodes (6), we are indeed running on the compute nodes:

$ swift p4.swift -nsim=1000 -steps=1

Swift 0.94.1 RC2 swift-r6895 cog-r3765

RunID: 20130827-1638-t23ax37a

Progress: time: Tue, 27 Aug 2013 16:38:11 -0500

Progress: time: Tue, 27 Aug 2013 16:38:12 -0500 Initializing:966

Progress: time: Tue, 27 Aug 2013 16:38:13 -0500 Selecting site:499 Submitting:500 Submitted:1

Progress: time: Tue, 27 Aug 2013 16:38:14 -0500 Selecting site:499 Stage in:1 Submitted:500

Progress: time: Tue, 27 Aug 2013 16:38:16 -0500 Selecting site:499 Submitted:405 Active:95 Stage out:1

Progress: time: Tue, 27 Aug 2013 16:38:17 -0500 Selecting site:430 Submitted:434 Active:66 Stage out:1 Finished successfully:69

Progress: time: Tue, 27 Aug 2013 16:38:18 -0500 Selecting site:388 Submitted:405 Active:95 Stage out:1 Finished successfully:111

...

Progress: time: Tue, 27 Aug 2013 16:38:30 -0500 Stage in:1 Submitted:93 Active:94 Finished successfully:812

Progress: time: Tue, 27 Aug 2013 16:38:31 -0500 Submitted:55 Active:95 Stage out:1 Finished successfully:849

Progress: time: Tue, 27 Aug 2013 16:38:32 -0500 Active:78 Stage out:1 Finished successfully:921

Progress: time: Tue, 27 Aug 2013 16:38:34 -0500 Active:70 Stage out:1 Finished successfully:929

Progress: time: Tue, 27 Aug 2013 16:38:37 -0500 Stage in:1 Finished successfully:1000

Progress: time: Tue, 27 Aug 2013 16:38:38 -0500 Stage out:1 Finished successfully:1000

Final status: Tue, 27 Aug 2013 16:38:38 -0500 Finished successfully:1001

$ grep "on node:" output/*log | head

output/sim_0.log:Running on node: nid00063

output/sim_100.log:Running on node: nid00060

output/sim_101.log:Running on node: nid00061

output/sim_102.log:Running on node: nid00032

output/sim_103.log:Running on node: nid00060

output/sim_104.log:Running on node: nid00061

output/sim_105.log:Running on node: nid00032

output/sim_106.log:Running on node: nid00060

output/sim_107.log:Running on node: nid00061

output/sim_108.log:Running on node: nid00062

$ grep "on node:" output/*log | awk '{print $4}' | sort | uniq -c

158 nid00032

156 nid00033

171 nid00060

178 nid00061

166 nid00062

171 nid00063

$ hostname

raven

$ hostname -f

nid00008Swift’s sites.xml configuration file allows many parameters to

specify how jobs should be run on a given cluster. Consider for

example that Raven has several queues, each with limitiations on the

size of jobs that can be run in them. All Raven queues will only run

2 jobs per user at one. The Raven queue "small" will only allow up to

4 nodes per job and 1 hours of walltime per job. The following

site.xml parameters will allow us to match this:

<profile namespace="globus" key="queue">small</profile>

<profile namespace="globus" key="slots">2</profile>

<profile namespace="globus" key="maxNodes">4</profile>

<profile namespace="globus" key="nodeGranularity">4</profile>To run large jobs, we can specify:

<profile namespace="globus" key="queue">medium</profile>

<profile namespace="globus" key="slots">2</profile>

<profile namespace="globus" key="maxNodes">8</profile>

<profile namespace="globus" key="nodeGranularity">8</profile>

<profile namespace="karajan" key="jobThrottle">50.0</profile>

<profile namespace="globus" key="maxTime">21600</profile>

<profile namespace="globus" key="lowOverAllocation">10000</profile>

<profile namespace="globus" key="highOverAllocation">10000</profile>This will enable 512 Swift apps (2 x 8 x 32) to run concurrently within 2 8-node jobs on Raven’s 32-core nodes. It results in the following two PBS jobs submitted by Swift to "provision" compute nodes to run thousands of apps, 512 at a time:

$ qstat -u $USER

Job ID Username Queue Jobname SessID NDS TSK Memory Time S Time

--------------- -------- -------- ---------- ------ --- --- ------ ----- - -----

288637.sdb p01532 medium B0827-2703 -- 8 256 -- 05:59 Q --

288638.sdb p01532 medium B0827-2703 -- 8 256 -- 05:59 Q --The following section is a summary of the important sites.xml

attributes for running apps on Cray systems. Many of these attributes

can be set the same for all Swift users of a given system; only a few

of the attributes need be overridden by users. We explain these

attributes in detail here to show the degree of control afforded by

Swift over application execution. Most users will use templates for a

given Cray system, only changing a few parameters to meet any unique

needs of their application workflows.

The additional attributes in the sites.xml file (described here

without their XML formatting) specify that Swift should run

applications on Raven in the following manner:

execution provider coaster, jobmanager local:pbs specifies that

Swift should run apps using its "coaster" provider, which submits

"pilot jobs" using qsub. These pilot jobs hold on to compute nodes and

allow Swift to run many app invocations within a single job. This

mechanism is described in

this paper from UCC-2011.

profile tags specify additional attributes for the execution

provider. (A "provider" is like a driver which knows how to handle

site-specific aspects of app execution). The attributes are grouped

into various "namespaces", but we can ignore this for now).

The env key PATHPREFIX specifies that our tutorial app directory

(../app) will be placed at the front of PATH to locate the app on

the compute node.

queue small specifies that pilot (coaster) jobs to run apps will be

submitted to Raven’s small queue.

providerAttributes pbs.aprun;pbs.mpp;depth=32 specifies some

Cray-specific attributes: that jobs should use Cray-specific PBS "mpp"

resource attributes (eg mppwidth and mppnppn) and an mppdepth of

32 (because we will be running one coaster process per node, and

Raven’s XE6 dual IL-16 nodes have a depth of 32 processing elements

(PEs).

jobsPerNode 32 tells Swift that each coaster should run up to 32

concurrent apps. This can be reduced to place fewer apps per node, eg

if each app needs more memory (or, rarely, greater than 32, e.g. if the apps are

IO-bound or for benchmark experiments, etc).

slots 2 specifies that Swift will run up to 2 concurrent PBS jobs,

and maxNodes 1 specifies that each of these jobs will request only 1

compute node.

maxWallTime 00:01:00 specifies that Swift should allow each app to

run for up to one minute of walltime within the larger pilot job. In

this example Swift will dynamically determine the total PBS walltime

needed for the pilot job, but this can be specified manually using

attributes maxtime along with highOverAllocation and

lowOverAllocation.

jobThrottle 3.20 specifies that Swift should allow up to 320 apps to

run on the raven site at once. This is typically set to a number

greater than or equal to the number of slots x compute nodes x apps

per node (jobsPerNode attribute).

initialscore 10000 is specified to override Swift’s automatic

throttling, and forces an actual throttle value of approximately

(specifically 1 over) jobThrottle * 100 to be used.

The last two attributes specify where and how Swift should perform

data management. workdirectory /lus/scratch/{env.USER}/swiftwork

specifies where the Swift "application execution sanbox directory"

used for each app will be located. In some situations this can be a

directory local to the compute node (eg, for Cray systems, /dev/shm

or /tmp, if those are writable by user jobs and the nodes have

sufficient space in these RAM-based filesystems).

Finally, stagingMethod sfs specifies that Swift will copy data to

and from the shared file system to the application sandbox

directories.

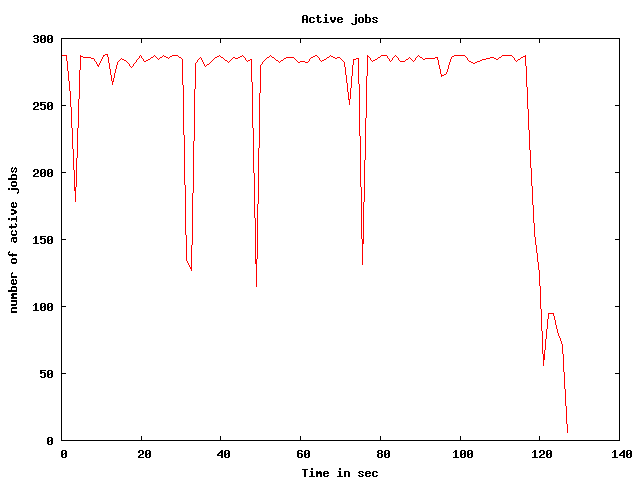

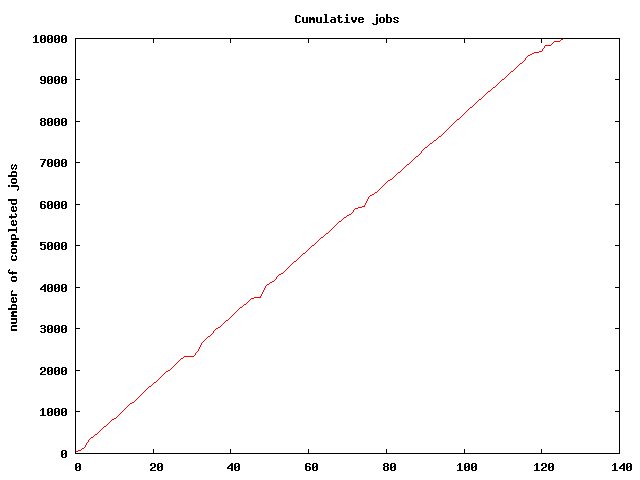

Plotting run activity

The tutorial bin directory in your PATH provides a script

plot.sh to plot the progress of a Swift script. It generates two

image files: activeplot.png, which shows the number of active jobs

over time, and cumulativeplot.png, which shows the total number of

app calls completed as the Swift script progresses.

After each swift run, a log file will be created called

partNN-<YYYYmmdd>-<hhmm>-<random>.log. Once you have identified the

log file name, run the command ./plot.sh <logfile>` (where logfile

is the most recent Swift run log) to generate the plots for that

specific run. For example:

$ ls -lt *.log | head

-rw-r--r-- 1 p01532 61532 2237693 Aug 26 12:45 p4-20130826-1244-kmos0d87.log

-rw-r--r-- 1 p01532 61532 1008 Aug 26 12:44 swift.log

-rw-r--r-- 1 p01532 61532 5345345 Aug 26 12:44 p4-20130826-1243-10u2qdbd.log

-rw-r--r-- 1 p01532 61532 357687 Aug 26 12:00 p4-20130826-1159-j01p4lu0.log

...

$ plot.sh p4-20130826-1244-kmos0d87.logThis yields plots like:

|

|

Because systems like Raven are often firewalled, you may need to use scp to pull these image files back to a system on which you can view them with a browser or preview tool. |

Part 5: Controlling the compute-node pools where applications run

In this section we’ll use the script p5.swift, very similar to

p4.swift of the prior section, to show how we can route apps to

specific sites, and also how we can make multiple pools of resources

(on the same or on different computer systems) available to run a

single Swift script.

First, lets specify that the analysis app stats.sh should be run on

the local login node instead of on the cluster. This is done simply by

change the site name field of the analyze app in the apps file:

$ cat apps

raven simulate simulate.sh

localhost stats stats.shRunning this with swift p5.swift we see:

$ grep "on node:" output/*.log

output/average.log:Running on node: raven

output/sim_0.log:Running on node: nid00029

output/sim_1.log:Running on node: nid00029

output/sim_2.log:Running on node: nid00029

output/sim_3.log:Running on node: nid00029

output/sim_4.log:Running on node: nid00029

output/sim_5.log:Running on node: nid00029

output/sim_6.log:Running on node: nid00029

output/sim_7.log:Running on node: nid00029

output/sim_8.log:Running on node: nid00029

output/sim_9.log:Running on node: nid00029Now lets make further use of Swift’s ability to route specific apps to specific pools of resources. The Cray Raven system has two node types, XE6 32-core 2 x IL-16, and XK7 16-core 1 x IL-16 plus one GPU. Each "pool" of nodes has different queue characteristics. We can define these differences to Swift as two separate pools, and then spread the load of executing a large ensemble of simulations across all the pools. (And we’ll continue to run the analysis script on a third pool, comprising the single login host.)

We use the following apps file:

$ cat multipools

raven simulate simulate.sh

ravenGPU simulate simulate.sh

localhost stats stats.shand we adjust the sites file to specify 4-node jobs in the Raven pool:

<profile namespace="globus" key="maxNodes">4</profile>

<profile namespace="globus" key="nodeGranularity">4</profile>This results in these PBS jobs:

p01532@raven:~> qstat -u $USER

Job ID Username Queue Jobname SessID NDS TSK Memory Time S Time

--------------- -------- -------- ---------- ------ --- --- ------ ----- - -----

288687.sdb p01532 small B0827-3406 9919 4 128 -- 00:59 R 00:00

288688.sdb p01532 gpu_node B0827-3406 9931 6 96 -- 00:59 R 00:00-

and achieves the following parallelism (of about 224 concurrent app tasks):

$ swift -tc.file multipools p5.swift -nsim=1000 -steps=3

Swift 0.94.1 RC2 swift-r6895 cog-r3765

RunID: 20130827-1829-o3h6mht5

Progress: time: Tue, 27 Aug 2013 18:29:31 -0500

Progress: time: Tue, 27 Aug 2013 18:29:32 -0500 Initializing:997

Progress: time: Tue, 27 Aug 2013 18:29:34 -0500 Selecting site:774 Submitting:225 Submitted:1

Progress: time: Tue, 27 Aug 2013 18:29:35 -0500 Selecting site:774 Stage in:1 Submitted:225

Progress: time: Tue, 27 Aug 2013 18:29:36 -0500 Selecting site:774 Stage in:1 Submitted:37 Active:188

Progress: time: Tue, 27 Aug 2013 18:29:39 -0500 Selecting site:774 Submitted:2 Active:223 Stage out:1

Progress: time: Tue, 27 Aug 2013 18:29:40 -0500 Selecting site:750 Submitted:17 Active:208 Stage out:1 Finished successfully:24

Progress: time: Tue, 27 Aug 2013 18:29:41 -0500 Selecting site:640 Stage in:1 Submitted:51 Active:174 Finished successfully:134

Progress: time: Tue, 27 Aug 2013 18:29:42 -0500 Selecting site:551 Submitted:11 Active:214 Stage out:1 Finished successfully:223

Progress: time: Tue, 27 Aug 2013 18:29:43 -0500 Selecting site:542 Submitted:2 Active:223 Stage out:1 Finished successfully:232

Progress: time: Tue, 27 Aug 2013 18:29:44 -0500 Selecting site:511 Submitting:1 Submitted:19 Active:206 Finished successfully:263

Progress: time: Tue, 27 Aug 2013 18:29:45 -0500 Selecting site:463 Stage in:1 Submitted:43 Active:182 Finished successfully:311

Progress: time: Tue, 27 Aug 2013 18:29:46 -0500 Selecting site:367 Submitting:1 Submitted:38 Active:186 Stage out:1 Finished successfully:407

Progress: time: Tue, 27 Aug 2013 18:29:47 -0500 Selecting site:309 Submitted:2 Active:223 Stage out:1 Finished successfully:465

Progress: time: Tue, 27 Aug 2013 18:29:48 -0500 Selecting site:300 Submitted:2 Active:223 Stage out:1 Finished successfully:474

Progress: time: Tue, 27 Aug 2013 18:29:50 -0500 Selecting site:259 Submitted:11 Active:214 Stage out:1 Finished successfully:515

Progress: time: Tue, 27 Aug 2013 18:29:51 -0500 Selecting site:201 Stage in:1 Submitted:39 Active:186 Finished successfully:573

Progress: time: Tue, 27 Aug 2013 18:29:52 -0500 Selecting site:80 Submitted:42 Active:184 Finished successfully:694

Progress: time: Tue, 27 Aug 2013 18:29:53 -0500 Selecting site:54 Submitted:2 Active:223 Stage out:1 Finished successfully:720

Progress: time: Tue, 27 Aug 2013 18:29:54 -0500 Selecting site:32 Submitted:4 Active:220 Stage out:1 Finished successfully:743

Progress: time: Tue, 27 Aug 2013 18:29:55 -0500 Submitted:3 Active:216 Stage out:1 Finished successfully:780

Progress: time: Tue, 27 Aug 2013 18:29:56 -0500 Stage in:1 Active:143 Finished successfully:856

Progress: time: Tue, 27 Aug 2013 18:29:57 -0500 Active:38 Stage out:1 Finished successfully:961

Progress: time: Tue, 27 Aug 2013 18:29:58 -0500 Active:8 Stage out:1 Finished successfully:991

Progress: time: Tue, 27 Aug 2013 18:29:59 -0500 Stage out:1 Finished successfully:999

Progress: time: Tue, 27 Aug 2013 18:30:01 -0500 Stage in:1 Finished successfully:1000

Progress: time: Tue, 27 Aug 2013 18:30:02 -0500 Active:1 Finished successfully:1000

Progress: time: Tue, 27 Aug 2013 18:30:06 -0500 Stage out:1 Finished successfully:1000

Final status: Tue, 27 Aug 2013 18:30:07 -0500 Finished successfully:1001Part 6: Specifying more complex workflow patterns

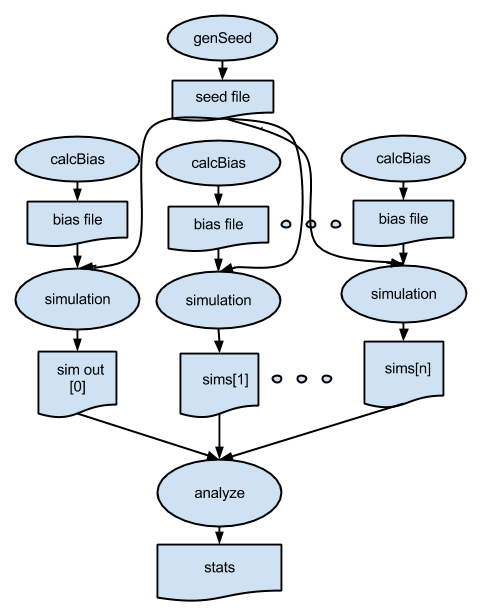

p6.swift expands the workflow pattern of p4.swift to add additional stages to the workflow. Here, we generate a dynamic seed value that will be used by all of the simulations, and for each simulation, we run an pre-processing application to generate a unique "bias file". This pattern is shown below, followed by the Swift script.

type file;

# app() functions for application programs to be called:

app (file out) genseed (int nseeds)

{

genseed "-r" 2000000 "-n" nseeds stdout=@out;

}

app (file out) genbias (int bias_range, int nvalues)

{

genbias "-r" bias_range "-n" nvalues stdout=@out;

}

app (file out, file log) simulation (int timesteps, int sim_range,

file bias_file, int scale, int sim_count)

{

simulate "-t" timesteps "-r" sim_range "-B" @bias_file "-x" scale

"-n" sim_count stdout=@out stderr=@log;

}

app (file out, file log) analyze (file s[])

{

stats @filenames(s) stdout=@out stderr=@log;

}

# Command line arguments

int nsim = @toInt(@arg("nsim", "10")); # number of simulation programs to run

int steps = @toInt(@arg("steps", "1")); # number of timesteps (seconds) per simulation

int range = @toInt(@arg("range", "100")); # range of the generated random numbers

int values = @toInt(@arg("values", "10")); # number of values generated per simulation

# Main script and data

tracef("\n*** Script parameters: nsim=%i range=%i num values=%i\n\n", nsim, range, values);

file seedfile<"output/seed.dat">; # Dynamically generated bias for simulation ensemble

seedfile = genseed(1);

int seedval = readData(seedfile);

tracef("Generated seed=%i\n", seedval);

file sims[]; # Array of files to hold each simulation output

foreach i in [0:nsim-1] {

file biasfile <single_file_mapper; file=@strcat("output/bias_",i,".dat")>;

file simout <single_file_mapper; file=@strcat("output/sim_",i,".out")>;

file simlog <single_file_mapper; file=@strcat("output/sim_",i,".log")>;

biasfile = genbias(1000, 20);

(simout,simlog) = simulation(steps, range, biasfile, 1000000, values);

sims[i] = simout;

}

file stats_out<"output/average.out">;

file stats_log<"output/average.log">;

(stats_out,stats_log) = analyze(sims);Note that the workflow is based on data flow dependencies: each simulation depends on the seed value, calculated in these two dependent statements:

seedfile = genseed(1);

int seedval = readData(seedfile);and on the bias file, computed and then consumed in these two dependent statements:

biasfile = genbias(1000, 20);

(simout,simlog) = simulation(steps, range, biasfile, 1000000, values);To run:

$ cd ../part06

$ swift p6.swiftThe default parameters result in the following execution log:

$ swift p6.swift

Swift 0.94.1 RC2 swift-r6895 cog-r3765

RunID: 20130827-1917-jvs4gqm5

Progress: time: Tue, 27 Aug 2013 19:17:56 -0500

*** Script parameters: nsim=10 range=100 num values=10

Progress: time: Tue, 27 Aug 2013 19:17:57 -0500 Stage in:1 Submitted:10

Generated seed=382537

Progress: time: Tue, 27 Aug 2013 19:17:59 -0500 Active:9 Stage out:1 Finished successfully:11

Final status: Tue, 27 Aug 2013 19:18:00 -0500 Finished successfully:22which produces the following output:

$ ls -lrt output

total 264

-rw-r--r-- 1 p01532 61532 9 Aug 27 19:17 seed.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_9.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_8.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_7.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_6.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_5.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_4.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_3.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_2.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_1.dat

-rw-r--r-- 1 p01532 61532 180 Aug 27 19:17 bias_0.dat

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:17 sim_9.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:17 sim_9.log

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:17 sim_8.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:17 sim_7.out

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:17 sim_6.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:17 sim_6.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:17 sim_5.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:17 sim_5.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:17 sim_4.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:17 sim_4.log

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:17 sim_1.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:18 sim_8.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:18 sim_7.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:18 sim_3.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:18 sim_3.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:18 sim_2.out

-rw-r--r-- 1 p01532 61532 14898 Aug 27 19:18 sim_2.log

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:18 sim_1.out

-rw-r--r-- 1 p01532 61532 90 Aug 27 19:18 sim_0.out

-rw-r--r-- 1 p01532 61532 14897 Aug 27 19:18 sim_0.log

-rw-r--r-- 1 p01532 61532 9 Aug 27 19:18 average.out

-rw-r--r-- 1 p01532 61532 14675 Aug 27 19:18 average.logEach sim_N.out file is the sum of its bias file plus newly "simulated" random output scaled by 1,000,000:

$ cat output/bias_0.dat

302

489

81

582

664

290

839

258

506

310

293

508

88

261

453

187

26

198

402

555

$ cat output/sim_0.out

64000302

38000489

32000081

12000582

46000664

36000290

35000839

22000258

49000506

75000310We produce 20 values in each bias file. Simulations of less than that number of values ignore the unneeded number, while simualtions of more than 20 will use the last bias number for all remoaining values past 20. As an exercise, adjust the code to produce the same number of bias values as is needed for each simulation. As a further exercise, modify the script to generate a unique seed value for each simulation, which is a common practice in ensemble computations.